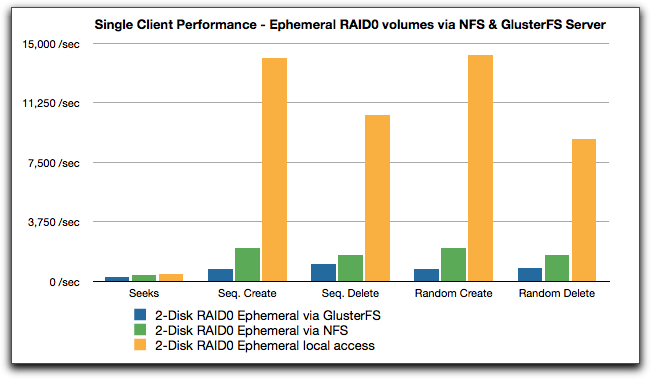

Early single-client tests of shared ephemeral storage via NFS and parallel GlusterFS

We here at BioTeam have been kicking the tires and generally exploring around the edges of the new Amazon cc1.4xlarge “compute cluster” EC2 instance types. Much of our experimentation has centered on simple benchmarking. We use it to slowly zero in on the methods, techniques and orchestration approaches most likely to make a significant difference in usability, performance or wallclock-time-to-scientific-results for the work we do for ourselves and our clients.

We are asking very broad questions and testing assumptions along the lines of:

- Does the hot new 10 Gigabit non-blocking networking fabric backing the new instance types really make “legacy” compute farm and HPC cluster architectures, which rely heavily on network filesharing, possible?

- How does filesharing between nodes look and feel on the new network and instance types?

- Are the speedy ephemeral disks on the new instance types suitable for bundling into NFS shares or aggregating into parallel or clustered distributed filesystems?

- Can we use the replication features in GlusterFS to mitigate some of the risks of using ephemeral disk for storage?

- Should the shared storage built from ephemeral disk be limited to “/scratch” or other non-critical duties because of the risks involved? What can we do to mitigate those risks?

- At what scale is NFS the easiest and most suitable sharing option? What are the best NFS server and client tuning parameters to use?

- When using parallel or cluster filesystems like GlusterFS, what rough metrics can we use to figure out how many data servers to dedicate to a particular cluster size or workflow profile?

GlusterFS & NFS Initial Testing

Over the past week we have been running tests on two types of network filesharing. We’ve only tested against a single client, so these results say nothing about at-scale performance or operation.

Types of tests:

- Take the pair of ~900GB ephemeral disks on the instance type, stripe them together as a RAID0 set, slap an XFS filesystem on top and export the entire volume out via NFS

- Take the pair of ~900GB ephemeral disks on the instance type, slap a single large partition on each drive, format each partition with an ext3 filesystem and then use GlusterFS to create, mount and export the volume via the GlusterFS protocol
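Both layouts are easy to express as command sequences, which is part of why they script so easily. The Python below is a minimal sketch that only builds the command strings so they can be reviewed or fed into an orchestration tool. The device names (`/dev/sdb`, `/dev/sdc`), brick paths, mount points and the volume name `scratch` are all assumptions, not the exact values from our runs. The partitioning step for the GlusterFS layout is also omitted.

```python
# Hypothetical sketch: builds (but does not run) shell commands for the two
# storage layouts tested. Device names, mount points, brick paths and the
# volume name are illustrative assumptions, not the values used in our tests.
from typing import List


def nfs_raid0_xfs_commands(devices: List[str], md: str = "/dev/md0",
                           export_dir: str = "/nfs") -> List[str]:
    """Layout 1: RAID0 the ephemeral disks, format with XFS, mount, export via NFS."""
    return [
        # Stripe all ephemeral disks into one software RAID0 device.
        f"mdadm --create {md} --level=0 --raid-devices={len(devices)} {' '.join(devices)}",
        # Put XFS on the striped device (assumes the disks are blank and unmounted).
        f"mkfs.xfs {md}",
        f"mkdir -p {export_dir}",
        f"mount {md} {export_dir}",
        # Re-export everything in /etc/exports (the export line is added separately).
        "exportfs -ra",
    ]


def glusterfs_ext3_commands(host: str, devices: List[str], volume: str = "scratch",
                            client_mount: str = "/gluster-scratch") -> List[str]:
    """Layout 2: one ext3 brick per ephemeral disk, combined into a GlusterFS volume."""
    cmds: List[str] = []
    bricks: List[str] = []
    for i, dev in enumerate(devices, start=1):
        part = f"{dev}1"  # assumes one large partition already exists on each disk
        brick = f"/export/brick{i}"
        # mkfs.ext3 on a ~900GB partition is the slow step discussed below.
        cmds += [f"mkfs.ext3 {part}", f"mkdir -p {brick}", f"mount {part} {brick}"]
        bricks.append(f"{host}:{brick}")
    # Untuned volume creation over TCP.
    cmds.append(f"gluster volume create {volume} transport tcp {' '.join(bricks)}")
    cmds.append(f"gluster volume start {volume}")
    # Run on the client: mount via the native GlusterFS protocol.
    cmds.append(f"mount -t glusterfs {host}:/{volume} {client_mount}")
    return cmds


if __name__ == "__main__":
    disks = ["/dev/sdb", "/dev/sdc"]  # assumed ephemeral device names
    print("\n".join(nfs_raid0_xfs_commands(disks)))
    print()
    print("\n".join(glusterfs_ext3_commands("server1", disks)))
```

Generating strings rather than executing them keeps the sketch safe to run anywhere. Wrapping each line in a remote-execution step is left to whatever orchestration tooling is already in place.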

For each of the two test types above we repeatedly ran (at least 4 times) our standard bonnie++ benchmark tests (methodology described in our earlier blog posts). The tests were run on a single remote client that mounted the file share via either NFS or GlusterFS.
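Each configuration was run at least four times, so it helps to report the spread as well as the average. The sketch below uses Python's `statistics` module. It assumes one throughput metric (for example bonnie++ block write in KB/s) has already been pulled out of each run's output. Parsing the bonnie++ CSV is left out because its column layout can differ between bonnie++ versions.

```python
# Minimal sketch: summarize repeated runs of a single benchmark metric.
# Assumes the per-run values were already extracted from bonnie++ output.
import statistics
from typing import NamedTuple, Sequence


class RunSummary(NamedTuple):
    runs: int
    mean: float
    stdev: float       # sample (n-1) standard deviation
    cv_percent: float  # coefficient of variation: stdev as a percent of mean


def summarize_runs(values: Sequence[float]) -> RunSummary:
    """Mean, sample standard deviation and coefficient of variation."""
    if len(values) < 2:
        raise ValueError("need at least two runs to estimate spread")
    mean = statistics.mean(values)
    stdev = statistics.stdev(values)
    # A large relative spread suggests noisy runs that are worth repeating.
    cv = 100.0 * stdev / mean if mean else float("nan")
    return RunSummary(len(values), mean, stdev, cv)
```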

GlusterFS parameters:

- None, really. We used the standard volume creation command and mounted the file share via the GlusterFS protocol over TCP. Eventually we want to ask some of our GlusterFS expert friends for additional tuning guidance.

NFS parameters:

- Server export file: “/nfs <host>(rw,async)”

- NFS server config: boosted the number of nfsd daemons to 16 by editing the /etc/sysconfig/nfs file

- Client mount options: “mount -t nfs -o rw,async,hard,intr,retrans=2,rsize=32768,wsize=32768,nfsvers=3,tcp <host>:/nfs /nfs-scratch”
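It helps to keep the NFS settings above in one place and render them the same way on every server and client. This hypothetical Python helper formats the export line, the nfsd thread count and the client mount command from option lists. `RPCNFSDCOUNT` is the variable that Red Hat-style `/etc/sysconfig/nfs` files use for the nfsd thread count. The host names `server1` and `client1` are placeholders.

```python
# Hypothetical helper: renders the NFS settings described above as text.
# Host names and paths are placeholders; nothing here touches the system.
from typing import Sequence

# Server-side async replies before data reaches disk: fine for scratch only.
EXPORT_OPTIONS = ["rw", "async"]
MOUNT_OPTIONS = ["rw", "async", "hard", "intr", "retrans=2",
                 "rsize=32768", "wsize=32768", "nfsvers=3", "tcp"]


def exports_line(path: str, client: str, options: Sequence[str]) -> str:
    """One /etc/exports entry, e.g. '/nfs client1(rw,async)'."""
    return f"{path} {client}({','.join(options)})"


def sysconfig_nfs_line(nfsd_count: int) -> str:
    """nfsd thread count as set in a Red Hat-style /etc/sysconfig/nfs."""
    if nfsd_count < 1:
        raise ValueError("need at least one nfsd thread")
    return f"RPCNFSDCOUNT={nfsd_count}"


def mount_command(server: str, export: str, mountpoint: str,
                  options: Sequence[str]) -> str:
    """Client-side NFS mount command with an explicit option list."""
    return f"mount -t nfs -o {','.join(options)} {server}:{export} {mountpoint}"


if __name__ == "__main__":
    print(exports_line("/nfs", "client1", EXPORT_OPTIONS))
    print(sysconfig_nfs_line(16))
    print(mount_command("server1", "/nfs", "/nfs-scratch", MOUNT_OPTIONS))
```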

Lessons Learned So Far – NFS vs GlusterFS

- GlusterFS was incredibly easy to install, and creating and exporting parallel filesystem shares was straightforward. The steps involved are easily scripted or built into a server orchestration strategy. The process was so simple that we initially thought GlusterFS would become our default sharing option for all our work on the new compute cluster instances.

- GlusterFS has ONE HUGE DOWNSIDE. It turns out that GlusterFS recommends formatting the participating disk volumes with an ext3 filesystem for best results. This is … problematic … with the 900GB ephemeral disks, because formatting a 900GB disk with ext3 takes damn near forever. We estimate that about 15-20 minutes of wallclock time is wasted waiting for the “mkfs.ext3” command to complete.

- The wallclock time lost to formatting ext3 volumes for GlusterFS is significant enough to affect whether and how we use GlusterFS in the future. Maybe there is a different filesystem with a faster formatting profile that we could use instead. With XFS and software RAID we can normally stand up and export filesystems in anywhere from a few seconds to a minute or two. Sadly, XFS is not recommended at all with current versions of GlusterFS.

- Using GlusterFS with the recommended ext3 configuration seems to mean accepting a delay of at least 15 minutes when standing up and exporting new storage. This is unacceptable for small deployments or for workflows where the EC2 instances may only run for a short time.

- Using the GlusterFS replication features to mitigate the risks of ephemeral storage could be significant. We need to do more testing in this configuration.

- Given the long wallclock delays inherent in waiting for ext3 formatting to complete in a GlusterFS scenario, it seems likely that we will default to a tuned NFS server setup for (a) small clusters and compute farms or (b) systems that we plan to stand up for only a few hours.

- The overhead of provisioning GlusterFS becomes less significant when we have very large clusters that can benefit from GlusterFS’s inherent scaling ability, or when we plan to keep the clusters up for longer periods of time.
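The trade-off in these last points comes down to simple arithmetic: the extra provisioning time matters in proportion to how long the instances will run. The sketch below rests on two assumptions. It considers setup time only, ignoring the scaling advantages GlusterFS may bring on large clusters. It also uses an arbitrary 5% tolerance. The function names and the threshold are hypothetical.

```python
# Rough sketch: is the extra GlusterFS/ext3 setup time worth paying for a run?
# Only setup time is considered; the 5% tolerance is an arbitrary assumption.


def setup_overhead_fraction(setup_minutes: float, runtime_minutes: float) -> float:
    """Fraction of total wallclock (setup + run) spent on setup."""
    total = setup_minutes + runtime_minutes
    if total <= 0:
        raise ValueError("setup plus runtime must be positive")
    return setup_minutes / total


def prefer_nfs(gluster_setup_min: float, nfs_setup_min: float,
               runtime_min: float, tolerance: float = 0.05) -> bool:
    """True if GlusterFS's *extra* setup time exceeds `tolerance` of wallclock."""
    extra = max(0.0, gluster_setup_min - nfs_setup_min)
    return setup_overhead_fraction(extra, runtime_min) > tolerance


if __name__ == "__main__":
    for hours in (2, 8, 24):
        frac = setup_overhead_fraction(15 - 2, hours * 60)
        print(f"{hours:>2} h run: {frac:.1%} lost, prefer NFS: {prefer_nfs(15, 2, hours * 60)}")
```

Take the low end of our ext3 estimate (15 minutes) against a couple of minutes for XFS. The extra ~13 minutes is roughly 10% of wallclock for a 2-hour run (13/133) but under 1% for a 24-hour run (13/1453).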

Benchmark Results

In all the results shown below I’ve included data from running bonnie++ locally against a 2-disk RAID0 ephemeral storage set, so that the network filesharing numbers can be contrasted with local disk performance.
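One simple way to make that contrast explicit is to express each network result as a percentage of the local RAID0 result for the same bonnie++ metric. The sketch below does this. Its metric names are placeholders, and it contains no measured values.

```python
# Minimal sketch: network filesystem results as a percentage of the local
# 2-disk RAID0 baseline. Metric names are placeholders, e.g. "block_write".
from typing import Dict, Mapping


def relative_to_local(network: Mapping[str, float],
                      local: Mapping[str, float]) -> Dict[str, float]:
    """Percent of local throughput achieved over the network, per shared metric."""
    result: Dict[str, float] = {}
    for metric, net_value in network.items():
        base = local.get(metric)
        if base is None:
            continue  # no local baseline for this metric; skip it
        if base <= 0:
            raise ValueError(f"local baseline for {metric!r} must be positive")
        result[metric] = 100.0 * net_value / base
    return result
```

For metrics where lower is better, such as latency, a value above 100% means the network share is slower. Those percentages read the other way round.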
