Note

Screwing around with the boot volume is part of our regular “explore around the edges” work before we get serious about how we are going to configure and orchestrate the new systems. The boot volume in this scenario does not have PV (paravirtualized) driver support and will therefore perform more slowly than the actual ephemeral storage. We only needed the boot volume to be big enough to hammer with bonnie++; this is not something we’d do in a production scenario.

Background

All of the cloud nerds at BioTeam are thrilled now that the Amazon Cluster Compute instances have been publicly launched. If you missed the exciting news, please visit the announcement post over at the AWS blog: aws.typepad.com/aws/2010/07/the-new-amazon-ec2-instance-type-the-cluster-compute-instance.html.

We’ve been madly banging on the new instance types, initially running some basic low-level benchmarks before we move on to the cooler benchmarks where we run actual life science and informatics pipelines against the hot new gear. Using our Chef Server, it’s trivial for us to orchestrate these new systems into working HPC clusters in just a matter of minutes. We plan to start blogging and demoing live deployment of elastic genome assembly pipelines and NextGen DNA sequencing instrument pipelines (like the Illumina software) on AWS soon.

As James Hamilton says, the real value in these new EC2 server types lies in the non-blocking 10 Gigabit Ethernet network backing them. All of a sudden our “legacy” cluster and compute farm practices that involve network file sharing among nodes via NFS, GlusterFS, Lustre and GPFS actually seem feasible rather than a sick, masochistic exercise in cloud futility.

We expect to see quite a bit of news in the near future about people using NFS, pNFS and other parallel/cluster filesystems for HPC data sharing on AWS. It seems like a no-brainer now that we have full bisection 10GbE bandwidth between our Cluster Compute cc1.4xlarge instances.

However, despite the fact that the coolest stuff is going to involve what we can do now node-to-node over the fast new networking fabric, there is still value in doing the low-level “what does this new environment look and feel like?” tests involving EBS disk volumes, S3 access and the like.

The Amazon AWS team did a great job preparing the way for people who want to quickly experiment with the new HPC instance types. The AMIs for these instances have to boot from EBS and run under HVM (hardware virtual machine) virtualization rather than the paravirtualization used by the other instance types.

Recognizing that bootstrapping an HVM-aware, EBS-booting EC2 server instance is a non-trivial exercise, AWS created a QuickStart public AMI with CentOS Linux (ami-7ea24a17) that anyone can use right away.

The Problem—how to grow the default 20GB system disk in the QuickStart AMI?

As beautiful as the CentOS HVM AMI is, it only gives us 20GB of disk space in its native form. This is perfectly fine for most of our use cases, but it presented a problem when we decided to use bonnie++ to perform some disk IO benchmarks on the local boot volume to complement our tests against more traditional mounted EBS volumes and RAID0 stripe sets.

Bonnie++ really wants to work against a filesystem that is at least twice the size of available RAM so as to mitigate any memory-related caching issues when testing actual IO performance.

The cc1.4xlarge “Cluster Compute” instance comes with ~23GB of physical RAM. Thus our problem—we wanted to run bonnie++ against the local system disk, but the disk is actually smaller than the amount of RAM available to the instance!

For this one particular IO test, we really wanted an HVM-compatible AMI that had at least 50GB of storage on the boot volume.
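The sizing rule is simple arithmetic, but it is easy to script when you are checking many instances. Below is a minimal Python sketch, assuming a Linux host that exposes /proc/meminfo; the function names are hypothetical helpers of ours, and the 2x factor is the bonnie++ rule of thumb described above. With ~23GB of RAM it asks for ~46GB, which is why a 20GB boot disk falls short and 50GB is a comfortable floor. The `bonnie_argv` helper uses bonnie++'s -d (test directory), -s (file size in MiB), -r (RAM size in MiB) and -u (run-as user) switches; the directory and user you pass in are your own choice.

```python
from __future__ import annotations

import re

KIB_PER_GIB = 1024 * 1024


def mem_total_gib(meminfo_text: str) -> float:
    """Return MemTotal from /proc/meminfo-style text, converted to GiB."""
    match = re.search(r"^MemTotal:\s+(\d+)\s+kB", meminfo_text, re.MULTILINE)
    if match is None:
        raise ValueError("no MemTotal line found")
    return int(match.group(1)) / KIB_PER_GIB  # /proc/meminfo "kB" is really KiB


def bonnie_min_size_gib(ram_gib: float, factor: float = 2.0) -> float:
    """bonnie++ rule of thumb: the test files should total at least twice RAM."""
    return ram_gib * factor


def disk_is_big_enough(free_disk_gib: float, ram_gib: float) -> bool:
    """True if the filesystem has room for a cache-defeating bonnie++ run."""
    return free_disk_gib >= bonnie_min_size_gib(ram_gib)


def bonnie_argv(test_dir: str, ram_mib: int, user: str = "nobody") -> list[str]:
    """Hypothetical bonnie++ invocation: -s is twice RAM, both sizes in MiB."""
    return ["bonnie++", "-d", test_dir, "-s", str(2 * ram_mib),
            "-r", str(ram_mib), "-u", user]


# Usage on a live Linux box:
#   with open("/proc/meminfo") as f:
#       ram = mem_total_gib(f.read())
#   print(disk_is_big_enough(20, ram))  # the stock 20GB boot disk
```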

The Solution

I was shocked and amazed to find that in about 20 minutes of screwing around with EBS snapshot sizes, instance disk partitions, and LVM settings, I was able to convert the Amazon QuickStart 20GB AMI into a custom version with an 80GB system disk.

The fact that this was possible out of the box, without having to debug mysterious boot failures, kernel panics, and all the other sorts of things I’m used to dealing with when messing with low-level disk and partition issues, is the ultimate testament both to Amazon’s engineering prowess (how cool is it that we can build EBS volumes of arbitrary size from a snapshot?) and to the current excellent state of Linux, Grub and LVM2.

I took a bunch of rough notes so I’d remember how the heck I managed to do this. Then I decided to clean up the notes and really document the process in case it might help someone else.

The Process

I will walk step by step through the commands and methods used to grow the system boot disk from 20GB to 80GB.

It boils down to two main steps:

- Launch the Amazon QuickStart AMI but override & increase the default 20GB boot disk size

- Get the CentOS Linux OS to recognize that it’s now running on a bigger disk

You can’t do the following step using the AWS Management Console, because the web UI does not let you alter the block device settings. You will need the EC2 command-line tools installed and working in order to proceed.

On the command line we can easily tell Amazon that we want to start the QuickStart AMI, but instead of building a 20GB EBS boot volume from its snapshot, we want a much larger volume built from that same snapshot.

If you look at the info for AMI ami-7ea24a17 within the web page you will see this under the details for the block devices that will be available to the system at boot:

Block Devices: /dev/sda1=snap-1099e578:20:true

That is basically saying that Linux device “/dev/sda1” will be built from EBS snapshot “snap-1099e578”. The next “:”-delimited field sets the volume size to 20GB, and the final field (“true”) tells EC2 to delete the volume when the instance terminates.
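If you want to script the override rather than edit the string by hand, the mapping is easy to pull apart. Here is a minimal Python sketch, assuming the device=snapshot:size:delete-on-termination layout shown above; the class and method names are ours, not part of any AWS tool. It also refuses to shrink the volume, since EC2 will not create a volume smaller than the snapshot it is built from.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class BlockDeviceMapping:
    device: str                  # e.g. /dev/sda1
    snapshot_id: str             # e.g. snap-1099e578
    size_gib: int                # volume size in GiB
    delete_on_termination: bool  # the trailing true/false field

    @classmethod
    def parse(cls, spec: str) -> "BlockDeviceMapping":
        device, sep, rest = spec.partition("=")
        if not sep:
            raise ValueError(f"missing '=' in {spec!r}")
        snapshot_id, size, delete = rest.split(":")  # ValueError unless 3 fields
        return cls(device, snapshot_id, int(size), delete.lower() == "true")

    def with_size(self, new_size_gib: int) -> "BlockDeviceMapping":
        # For this AMI the default size equals the snapshot size, and a volume
        # cannot be smaller than the snapshot it comes from.
        if new_size_gib < self.size_gib:
            raise ValueError("cannot shrink below the snapshot size")
        return replace(self, size_gib=new_size_gib)

    def __str__(self) -> str:
        delete = "true" if self.delete_on_termination else "false"
        return f"{self.device}={self.snapshot_id}:{self.size_gib}:{delete}"


stock = BlockDeviceMapping.parse("/dev/sda1=snap-1099e578:20:true")
print(stock.with_size(80))  # /dev/sda1=snap-1099e578:80:true
```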

We are going to change that from 20GB to 80GB.

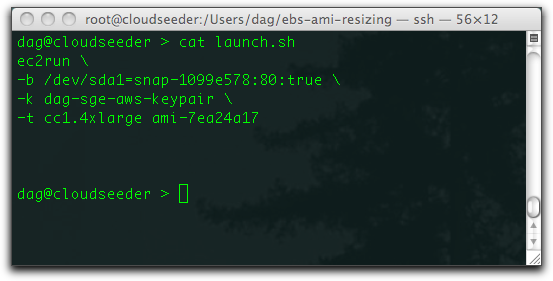

Here is the command to launch that AMI using a disk of 80GB in size instead of the default 20GB.

In the following screenshot, note how we are starting the Amazon AMI ami-7ea24a17 with a block device (the “-b” switch) that is bootstrapping itself from the snapshot “snap-1099e578”.

All we needed to do to give the EC2 server a larger boot disk is pass in “80” to override the default value of 20GB. Confused? Look at the “-b” block device argument below; the 80GB is set right after the snapshot name:
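For scripted launches, the same command can be assembled in Python. This is a rough sketch that assumes the legacy EC2 API command-line tools are installed and configured. The -t (instance type), -k (key pair) and -b (block device mapping) switches belong to ec2-run-instances; the key pair name and the helper functions are placeholders of ours. By default it only prints the command, so you can check it before spending money.

```python
from __future__ import annotations

import shlex
import subprocess


def run_instances_argv(ami_id: str, mapping: str,
                       instance_type: str = "cc1.4xlarge",
                       keypair: str | None = None) -> list[str]:
    """Build an ec2-run-instances command line with a block device override."""
    argv = ["ec2-run-instances", ami_id, "-t", instance_type, "-b", mapping]
    if keypair:
        argv += ["-k", keypair]
    return argv


def launch(argv: list[str], dry_run: bool = True) -> str | None:
    """Print the command (dry run) or actually run it and return its stdout."""
    if dry_run:
        print(shlex.join(argv))
        return None
    result = subprocess.run(argv, check=True, capture_output=True, text=True)
    return result.stdout


# "my-keypair" is a placeholder; substitute your own EC2 key pair name.
argv = run_instances_argv("ami-7ea24a17", "/dev/sda1=snap-1099e578:80:true",
                          keypair="my-keypair")
launch(argv)  # dry run: prints the command only
```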

Your instance might take a bit longer than normal to boot, but once it is online and available you can log in normally.

Of course, the system will appear to have the default 20GB system disk:

Even the LVM2 physical volume report shows the ~20GB size:

However, if we actually use the ‘fdisk’ command to examine the disk, we see that the block device is, indeed, much larger than the 20GB the operating system thinks it has to work with:

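Fdisk tells us that disk device /dev/hda has 85.8 GB of physical capacity. (Under HVM, without PV drivers, the boot volume that EC2 maps as /dev/sda1 shows up inside the guest as the emulated IDE disk /dev/hda.)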
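Why 85.8 and not 80? Mostly units. EBS volume sizes are specified in GiB (2^30 bytes), while the fdisk summary line reports decimal GB (10^9 bytes). A quick Python check of the conversion:

```python
GIB = 2**30  # EBS sizes are binary gigabytes
GB = 10**9   # fdisk's summary line uses decimal gigabytes


def gib_to_gb(gib: float) -> float:
    """Convert binary gigabytes (GiB) to decimal gigabytes (GB)."""
    return gib * GIB / GB


for size in (20, 80):
    print(f"{size} GiB = {gib_to_gb(size):.2f} GB")
# 20 GiB = 21.47 GB
# 80 GiB = 85.90 GB
```

That is close to the 85.8 GB fdisk prints; presumably the small remaining difference is down to how fdisk rounds its summary.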

Now we need to teach the OS to make use of that space!

There are only two partitions on this disk. /dev/hda1 is the 100MB /boot partition common to Red Hat variants; let’s leave that alone.

The second partition, /dev/hda2, is already set up as an LVM physical volume. We are going to be lazy: we will use ‘fdisk’ to delete the /dev/hda2 partition and immediately recreate it so that it spans all of the remaining space on the physical drive. This is safe because deleting a partition only removes its entry from the partition table; the LVM data stays put as long as the recreated partition starts at exactly the same place as the old one.

After typing “fdisk /dev/hda” we type “d” and delete partition “2”. Then we type “n” for a new partition, “p” for primary, and “2” to make it partition number 2. After that we just hit return to accept the default suggestions for the start and end of the recreated second partition. The default start is the first free cylinder after /dev/hda1, which should be where the old /dev/hda2 began; compare it against the original table before writing anything.

If it all worked, we can type the “p” command to print the new partition table out.

Note how /dev/hda2 now has many more blocks? Cool!

We are not done yet. None of our partition changes have actually been written to disk. We still need to type “w”, which writes the new partition table to disk and exits fdisk. (Typing “q” instead would quit without saving anything.)

Because the disk is in use, the kernel can’t re-read the new partition table on the fly (fdisk will warn about this), so the new partition settings only take effect after a system reboot.
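If you would rather not type the fdisk dialogue by hand (say, across a whole cluster), the keystrokes can be generated and piped in. Here is a minimal Python sketch that only builds the input text, assuming the same two-partition layout as above. The function name and the optional LVM type change are our additions, not part of the walk-through. By default fdisk gives a new partition type 83 (Linux). LVM identifies physical volumes by the label written inside the partition, not by the partition type, but setting type 8e (Linux LVM) keeps the table tidy.

```python
def fdisk_recreate_script(partition: int = 2, set_lvm_type: bool = False) -> str:
    """Keystrokes that delete and recreate `partition` to fill the rest of the disk."""
    num = str(partition)
    keys = [
        "d", num,       # delete the partition entry (data on disk is untouched)
        "n", "p", num,  # new primary partition with the same number
        "",             # accept the default first cylinder (first free one)
        "",             # accept the default last cylinder (end of disk)
    ]
    if set_lvm_type:
        keys += ["t", num, "8e"]  # optional: mark the partition as Linux LVM
    keys += ["p", "w"]  # print the new table, then write it and exit
    return "\n".join(keys) + "\n"


# Review the output first, then feed it to fdisk, e.g. with
#   subprocess.run(["fdisk", "/dev/hda"], input=script, text=True)
print(fdisk_recreate_script(), end="")
```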

Now we reboot the system and wait for it to come back up.

When it comes back up, don’t be alarmed that both ‘df’ and ‘pvscan’ still show the incorrect size:

We can fix that! Now we are in the realm of LVM, so we use the “pvresize <device>” command to grow the LVM physical volume to fill the enlarged partition. Since our LVM2 partition is still /dev/hda2, that is the physical device path we give it:

Success! LVM recognizes that the drive is larger than 20GB.
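To check the result from a script instead of eyeballing pvscan, pvs can print just the fields you care about. A minimal sketch, assuming the LVM2 tools are on the PATH and the script runs as root; the helper names are ours, and `--units g` asks pvs for sizes in GiB.

```python
from __future__ import annotations

import subprocess

PVS_FIELDS = ["pvs", "--noheadings", "--nosuffix", "--units", "g",
              "-o", "pv_name,pv_size,pv_free"]


def parse_pvs(output: str) -> dict[str, tuple[float, float]]:
    """Map PV name -> (size GiB, free GiB) from `pvs` output using PVS_FIELDS."""
    sizes: dict[str, tuple[float, float]] = {}
    for line in output.splitlines():
        fields = line.split()
        if len(fields) == 3:
            name, size, free = fields
            sizes[name] = (float(size), float(free))
    return sizes


def grow_pv(device: str = "/dev/hda2") -> tuple[float, float]:
    """Run pvresize on `device`, then return its new (size, free) in GiB."""
    subprocess.run(["pvresize", device], check=True)
    out = subprocess.run(PVS_FIELDS + [device], check=True,
                         capture_output=True, text=True).stdout
    return parse_pvs(out)[device]
```

After a successful resize, the free figure should be large enough for the 60GB logical volume created in the next step.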

With LVM aware that the disk is larger we are pretty much done. We can resize an existing logical volume or add a new one to the default Volume Group (“VolGroup00”).

Since I’m lazy AND I want to mount the extra space away from the root (“/”) volume, I chose to create a new logical volume that shares the same /dev/hda2 physical volume (“PV”) and Volume Group (“VG”).

We are going to use the command “lvcreate VolGroup00 --size 60G /dev/hda2” to make a new 60GB logical volume that is part of the existing Volume Group named “VolGroup00”:

Success. Note that our new logical volume got assigned a default name of “lvol0” and it now exists in the LVM device path of “/dev/mapper/VolGroup00-lvol0”.
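The naming is predictable. Without a `--name` (`-n`) option, lvcreate picks lvol0, lvol1 and so on, and device-mapper names the device VG-LV with any hyphen inside either name doubled. Here is a small Python sketch of both; the helper names are our own.

```python
from __future__ import annotations


def lvcreate_argv(vg: str, size: str, pv: str | None = None,
                  name: str | None = None) -> list[str]:
    """Build an lvcreate command; without a name, LVM picks lvol0, lvol1, ..."""
    argv = ["lvcreate", "--size", size]
    if name:
        argv += ["--name", name]
    argv.append(vg)
    if pv:
        argv.append(pv)  # restrict allocation to this physical volume
    return argv


def mapper_path(vg: str, lv: str) -> str:
    """device-mapper path for an LV; hyphens inside VG/LV names are doubled."""
    return "/dev/mapper/{}-{}".format(vg.replace("-", "--"), lv.replace("-", "--"))


print(" ".join(lvcreate_argv("VolGroup00", "60G", "/dev/hda2")))
print(mapper_path("VolGroup00", "lvol0"))  # /dev/mapper/VolGroup00-lvol0
```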

Now we need to place a Linux filesystem on our new 60GB of additional space and mount it. Since I am a fan of XFS on EC2, I first need to install the “xfsprogs” RPM and then format the volume. A simple “yum -y install xfsprogs” does the trick, and now I can make XFS filesystems on my server:

Success. We now have 60GB more space, visible to the OS and formatted with a filesystem. The final step is to mount it.
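Here are the format-and-mount steps in the same sketch style. The mount point /mnt/bonnie is a hypothetical choice of ours, as is the /etc/fstab entry, which you only need if the volume should come back after a reboot. A pass number of 0 skips the boot-time fsck, which is the usual choice for XFS.

```python
from __future__ import annotations

import shlex

DEVICE = "/dev/mapper/VolGroup00-lvol0"
MOUNTPOINT = "/mnt/bonnie"  # hypothetical mount point


def format_and_mount_steps(device: str, mountpoint: str) -> list[list[str]]:
    """Commands to install XFS tools, format the LV and mount it."""
    return [
        ["yum", "-y", "install", "xfsprogs"],
        ["mkfs.xfs", device],
        ["mkdir", "-p", mountpoint],
        ["mount", "-t", "xfs", device, mountpoint],
    ]


def fstab_line(device: str, mountpoint: str, fstype: str = "xfs",
               options: str = "defaults", dump: int = 0, passno: int = 0) -> str:
    """One /etc/fstab entry: device, mount point, type, options, dump, fsck pass."""
    return " ".join([device, mountpoint, fstype, options, str(dump), str(passno)])


for cmd in format_and_mount_steps(DEVICE, MOUNTPOINT):
    print(shlex.join(cmd))
print(fstab_line(DEVICE, MOUNTPOINT))
```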

And we are done. We’ve successfully converted the 20GB Amazon QuickStart AMI into a version with a much larger boot volume.

Conclusion

None of this is rocket science. It’s actually just Linux Systems Administration 101.

The real magic here is how easily this is all accomplished on our virtual cloud system using nothing but a web browser and some command-line utilities.

What makes this process special for me is how quick and easy it was—anyone who has spent any significant amount of time managing many physical Linux server systems knows the pain and hours lost when trying to do this stuff in the real world on real (and flaky) hardware.

I can’t even count how many hours of my life I’ve lost trying to debug Grub bootloader failures, mysterious kernel panics, and other hard-to-troubleshoot booting and disk resizing efforts on production and development server systems when I’ve altered settings that we’ve covered in this post. In cluster environments, we often have to do this debugging via a 9600 baud serial console or via flaky IPMI consoles. It’s just nasty.

The fact that this method worked so quickly and so smoothly is probably only amazing to people who know the real pain of having done this in the field, crouched on the floor of a freezing cold datacenter, and trying not to pull your hair out as text scrolls slowly by at 9600 baud.

Congrats to the Amazon AWS team. Fantastic work. It’s a real win when virtual infrastructure is this easy to manipulate.