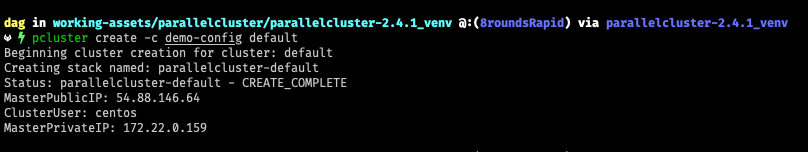

Image: Firing up a public cluster configured to deploy through a logging Squid proxy was the only way to discover all of the various Internet-based URLs and endpoints that AWS ParallelCluster needs in order to successfully complete a full deployment.

AWS ParallelCluster Private Deployment in Hardened VPCs

Executive Summary

If you are struggling like me to provide a Firewall and InfoSec team with a list of the external communication requirements of AWS ParallelCluster, skip to the bottom of this post. I recently had the chance to deploy this AWS HPC product through a logging Squid proxy, and the proxy logs turned out to be super useful. The full raw log data is available, along with a curated, context-aware interpretation of the destinations.

Background

I’m an HPC nerd with a biology background. A significant chunk of my professional career in the life sciences has involved building, configuring, tuning, troubleshooting and operating large Linux clusters acting as HPC grids to execute life science research workflows.

I loved CfnCluster and I love what CfnCluster became when it was rebranded and refactored as AWS ParallelCluster. Let me list the ways that I love this product stack:

- AWS ParallelCluster perfectly creates Linux HPC grids on AWS that look, function and operate exactly like on-premise or on-campus grids

- This is fantastic for existing workflows (many of which will never be rewritten or re-architected for serverless execution or converted to using only object storage for data). It also greatly minimizes the training and knowledge transfer required for an HPC cloud migration project

- This includes all the things we expect like a shared filesystem and functional MPI parallel message passing stack

On top of delivering a sweet HPC environment that looks and feels familiar, ParallelCluster also gives us some nice cloud features that simply don’t exist on-premise:

- Auto-scaling! My grids now shrink and expand depending on the length of the pending job list

- Spot market support! My grids can now leverage super cheap spare AWS capacity when I don’t have time sensitive workloads

- AWS FSx support! Easy integration with AWS FSx/Lustre if I need parallel scratch filesystem features

- It’s an officially supported AWS product which means Enterprise Support and Amazon TAMs are interested and will actively help support and troubleshoot issues

- The developers are amazing and super responsive on their GitHub page

The General Problem

As a person who mainly does AWS work for commercial and industrial clients, my biggest overall complaint with AWS best practices, reference design architectures, tutorials, community HowTos and all of the evangelist activities is that they all make the same rose-colored and often inaccurate assumptions:

- Legacy workflows and requirements simply do not exist and are not worth discussing.

- Anything and Everything can, should and will be totally rebuilt and rearchitected for serverless or cloud-native design patterns

- Every user will have the necessary IAM permissions to run CloudFormation stacks that may create VPCs, alter route tables, deploy Internet Gateways and attach major infrastructure crap directly to the public Internet

But my biggest complaint of all?

- 99% of AWS documentation, tutorials, design patterns, reference architectures and evangelist materials assume that unrestricted Internet access is always available, to all destinations for all things sitting in AWS VPC public or private subnets.

The operating assumption by AWS marketing, technical and PR people that everything always has unrestricted egress access to the full Internet is becoming the bane of my existence. Growing portions of the real world simply do not work this way and implementing “unrestricted internet access for all the things!” is (in my mind) a fairly significant security risk that needs to be accounted for in AWS customer InfoSec threat models.

Current HPC Situation – 100% Private VPC with API and Internet Access Blocked By Default

I can’t divulge many details but the general gist of a hardened AWS footprint for a security conscious client is this:

- Production VPCs are 100% private. There are no public-facing subnets in the standard VPC design

- No unsolicited inbound traffic from the Internet. At all. Ever.

- Systems, Servers and Lambda functions by default have no route to the Internet

- Systems, Servers and Lambda functions by default have no route to AWS API endpoints

- Lots of firewalls. Lots. Most of them are capable of SSL decrypt and payload inspection as TLS/HTTPS is becoming a major threat vector for data exfiltration, malware and botnet C&C coordination

- Access to AWS APIs or any Internet destination requires approval and an active firewall policy change

CfnCluster / ParallelCluster Past Versions

Prior versions of CfnCluster/ParallelCluster were fairly straightforward to operate even in our “hardened” subnets because the external communication requirements were very simple:

- ParallelCluster needs to talk to a bunch of AWS APIs for both deployment as well as ongoing operation

- Core AMIs are custom regional images and contain the vast majority of software/code that needs to be brought inside

- Non-AMI data and assets like chef cookbooks used in the deployment were all stored in regional S3 buckets owned by the ParallelCluster team

- Access to EPEL repo and the OS/Patch/Update sites for CentOS/Ubuntu/Amazon Linux

- Access to Python PyPI mirror(s)

So that was pretty easy for us to work with. We just had to whitelist or authorize access to a known set of AWS APIs and we also had to allow HTTPS downloads from known S3 bucket locations.

The “hardest” thing was generating custom AMIs or pre_install bootstrap scripts that replaced the node OS software and EPEL repo files with versions that pointed to our local internal mirrors. Since we needed to touch files in the node OS anyway it was trivial to also install the extra SSL CA certs our firewalls required.
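
For anyone wanting to do the same, here is a rough Python sketch of the repo-file rewrite step. It is not our actual bootstrap script: the function name `point_repo_at_mirror`, the internal mirror prefix `https://mirror.internal.example.com` and the upstream host list are hypothetical placeholders. It comments out `mirrorlist=`/`metalink=` lines (which let yum pick arbitrary Internet mirrors) and turns any `baseurl=` line, commented or not, that starts with a known upstream prefix into one pointing at the internal mirror.

```python
import re
from typing import Iterable

# Matches "baseurl=..." whether or not it is commented out with a leading "#".
BASEURL_RE = re.compile(r"#?\s*baseurl\s*=\s*(\S+)")


def point_repo_at_mirror(repo_text: str, upstream_hosts: Iterable[str], mirror_base: str) -> str:
    """Rewrite a yum .repo file so it pulls from an internal mirror (sketch).

    upstream_hosts: URL prefixes to swap out, e.g. "http://mirror.centos.org".
    mirror_base:    hypothetical internal mirror prefix to swap in.
    """
    hosts = tuple(upstream_hosts)
    out = []
    for line in repo_text.splitlines():
        stripped = line.strip()
        # Disable dynamic mirror selection, which can send clients anywhere on the Internet.
        if stripped.startswith(("mirrorlist=", "metalink=")):
            out.append("#" + stripped)
            continue
        m = BASEURL_RE.match(stripped)
        if m:
            url = m.group(1)
            for host in hosts:
                if url.startswith(host):
                    # Enable baseurl and point it at the internal mirror.
                    out.append("baseurl=" + mirror_base + url[len(host):])
                    break
            else:
                out.append(line)  # unknown upstream prefix: leave the line untouched
            continue
        out.append(line)
    return "\n".join(out) + "\n"
```

This straight prefix swap assumes the internal mirror keeps the upstream directory layout (e.g. `/centos/$releasever/os/$basearch/`); the extra firewall CA certs would still be installed separately into the system trust store.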

Simply replacing the software repo files with versions that pulled from private software mirrors reduced the external traffic requirements for ParallelCluster to “S3 downloads + APIs” which was fantastic.

Until it was not …

ParallelCluster Current Version (v2.4.1 as of this writing)

As of now I’m unable to deploy the latest version of ParallelCluster at my client because the latest version has started to require download access to undocumented website URLs.

It took a lot of time and troubleshooting to figure out why our new HPC grid deployments failed and the CloudFormation stacks rolled back.

This was because nowhere in the release notes or on the project website was there an announcement that new destinations were required. I’m not super angry about this because it feels like we are an edge case. Remember again: almost all cloud evangelism and design patterns make the core assumption that “internet access is always available from everything to everything,” so why would anyone but a bunch of niche-market weirdos care about listing out exactly what external access a product like this requires?

Root cause turned out to be …

- Both master and compute nodes seem to require HTTPS access to github.com during cluster deployment

- Our firewall was killing these connection attempts via connection-reset packets

- The failure to download from GitHub triggers the node deploy failure state

- Eventually the CloudFormation timeout is hit and the entire grid does a rollback and self-deletion
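
In hindsight, a quick preflight run from a node in the target subnet would likely have surfaced the GitHub block in seconds instead of after a CloudFormation timeout. Below is a minimal Python sketch of such a check; `check_destinations` is a hypothetical helper, not part of ParallelCluster. It opens a TCP connection to each `host:port` and, by default, completes a TLS handshake with SNI, on the assumption that an inspecting firewall may only reset the connection once it sees the TLS hostname rather than at TCP connect time.

```python
import socket
import ssl
from typing import Dict, Iterable


def check_destinations(dests: Iterable[str], timeout: float = 5.0, tls: bool = True) -> Dict[str, str]:
    """Try each "host:port" and report "ok" or the reason it failed (sketch)."""
    ctx = ssl.create_default_context()  # uses the system CA trust store
    results: Dict[str, str] = {}
    for dest in dests:
        host, _, port = dest.rpartition(":")
        try:
            with socket.create_connection((host, int(port)), timeout=timeout) as sock:
                if tls:
                    # Send SNI so firewalls that filter on the TLS hostname get a chance to act;
                    # verification also fails if an inspecting firewall's CA is not trusted here.
                    with ctx.wrap_socket(sock, server_hostname=host):
                        pass
            results[dest] = "ok"
        except OSError as exc:  # covers resets, refusals, timeouts, DNS and TLS errors
            results[dest] = f"FAILED: {type(exc).__name__}: {exc}"
    return results
```

For example, calling `check_destinations(["github.com:443", "us-east-1-aws-parallelcluster.s3.amazonaws.com:443"])` on a freshly launched node would return `"ok"` or a `FAILED: ...` string per destination; a firewall that kills connections with reset packets would be expected to show up as a `ConnectionResetError`.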

Documenting All ParallelCluster Communication Requirements

I finally had the time to stand up a logging Squid proxy server in a personal AWS account and do a full AWS ParallelCluster deployment through the proxy so I could log all of the HTTPS traffic coming from both the Master and Compute HPC nodes.

Sorting the Squid logs was all I needed to generate an API and destination list for our firewall team.

This is not super useful or interesting data but maybe it will help someone else who also needs to work with a Firewall team on managing outbound traffic and API traffic.
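
A minimal Python sketch of that sorting step is below; the function name `unique_destinations` is hypothetical. It assumes Squid's default native `access.log` format, where the request URL is the seventh whitespace-separated field: for tunneled HTTPS that field is the `CONNECT` target (`host:port`), and for plain HTTP it is the full URL, which is why the destination lists in this post mix both forms.

```python
from typing import Iterable, List


def unique_destinations(log_lines: Iterable[str]) -> List[str]:
    """Return sorted, de-duplicated request targets from Squid native access.log lines.

    Assumed field layout (native format):
    time elapsed client code/status bytes method URL user hierarchy/peer type
    """
    seen = set()
    for line in log_lines:
        fields = line.split()
        if len(fields) < 7:
            continue  # skip blank or truncated lines
        # CONNECT requests log "host:port"; plain HTTP requests log the full URL.
        seen.add(fields[6])
    return sorted(seen)
```

It accepts any iterable of lines, so an open file handle for the proxy's access log (commonly `/var/log/squid/access.log`, but check your install) can be passed straight in.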

Full Raw Proxy Logs From ParallelCluster Deployment

If you want a full .txt file showing all of the Squid proxy logs for a 3-node CentOS 7 ParallelCluster deployment in the US-EAST-1 region, grab the download attached to this post.

Deduplicated List Of All Destinations From ParallelCluster Deployment

If you just want a unique set of external URLs with duplication removed, here is the parsed version of the full Squid log:

autoscaling.us-east-1.amazonaws.com:443
cloudformation.us-east-1.amazonaws.com:443
d2lzkl7pfhq30w.cloudfront.net:443
dynamodb.us-east-1.amazonaws.com:443
ec2.us-east-1.amazonaws.com:443
epel.mirror.constant.com:443
github.com:443
http://mirror.cs.pitt.edu/epel/7/x86_64/repodata/060e3733e53cb4498109a79991d586acf17b7f8e3ea056b2778363f774ad367b-primary.sqlite.bz2
http://mirror.cs.pitt.edu/epel/7/x86_64/repodata/733703470dd10accd9234492acb0c74de09f91772c2767107f6b0fa567cbc26a-updateinfo.xml.bz2
http://mirror.cs.pitt.edu/epel/7/x86_64/repodata/repomd.xml
http://mirror.es.its.nyu.edu/epel/7/x86_64/repodata/060e3733e53cb4498109a79991d586acf17b7f8e3ea056b2778363f774ad367b-primary.sqlite.bz2
http://mirror.es.its.nyu.edu/epel/7/x86_64/repodata/733703470dd10accd9234492acb0c74de09f91772c2767107f6b0fa567cbc26a-updateinfo.xml.bz2
http://mirror.es.its.nyu.edu/epel/7/x86_64/repodata/a73afa039fca718643fde7cf3bae07fc4f8f295e61ee9ea00ec2bb01c06d9fb0-comps-Everything.x86_64.xml.gz
http://mirror.es.its.nyu.edu/epel/7/x86_64/repodata/repomd.xml
http://mirrorlist.centos.org/?
http://mirrors.advancedhosters.com/centos/7.7.1908/extras/x86_64/repodata/e87982a7d437db5420d7a3821de1bd4a7b145d57a64a3a464cdeed198c4c1ad5-primary.sqlite.bz2
http://mirrors.advancedhosters.com/centos/7.7.1908/extras/x86_64/repodata/repomd.xml
http://mirrors.advancedhosters.com/centos/7.7.1908/os/x86_64/repodata/04efe80d41ea3d94d36294f7107709d1c8f70db11e152d6ef562da344748581a-primary.sqlite.bz2
http://mirrors.advancedhosters.com/centos/7.7.1908/os/x86_64/repodata/4af1fba0c1d6175b7e3c862b4bddfef93fffb84c37f7d5f18cfbff08abc47f8a-c7-x86_64-comps.xml.gz
http://mirrors.advancedhosters.com/centos/7.7.1908/os/x86_64/repodata/repomd.xml
http://mirrors.advancedhosters.com/centos/7.7.1908/updates/x86_64/repodata/9bdf7f17529d8072d5326d82e4e131a18d122462fb5104bed71eb5f7a0dd3ef6-primary.sqlite.bz2
http://mirrors.advancedhosters.com/centos/7.7.1908/updates/x86_64/repodata/repomd.xml
http://s3.amazonaws.com/ec2metadata/ec2-metadata
iad.mirror.rackspace.com:443
mirror.umd.edu:443
mirrors.fedoraproject.org:443
pypi.org:443
queue.amazonaws.com:443
us-east-1-aws-parallelcluster.s3.amazonaws.com:443
yum.repos.intel.com:443

Context & Category Aware ParallelCluster Destinations:

AWS API and S3 Storage Endpoints:

cloudformation.us-east-1.amazonaws.com:443
autoscaling.us-east-1.amazonaws.com:443
dynamodb.us-east-1.amazonaws.com:443
ec2.us-east-1.amazonaws.com:443
queue.amazonaws.com:443
http://s3.amazonaws.com/ec2metadata/ec2-metadata
us-east-1-aws-parallelcluster.s3.amazonaws.com:443

Other Destinations (Not API, Not S3, Not OS or Package Repo)

github.com:443

CentOS Mirrors, EPEL Mirrors and Python PyPI Mirrors

Note: This is the hardest category from a Firewall perspective. You can see below that these destinations are all CDN networks or redirecting mirror sites that can end up sending your download clients to a different set of download destinations on every request or every cluster deployment. Our use of internal mirrors for downloads of this type solves this issue neatly for us as I can ignore these log entries.

d2lzkl7pfhq30w.cloudfront.net:443
epel.mirror.constant.com:443
http://mirror.cs.pitt.edu/epel/7/x86_64/repodata/060e3733e53cb4498109a79991d586acf17b7f8e3ea056b2778363f774ad367b-primary.sqlite.bz2
http://mirror.cs.pitt.edu/epel/7/x86_64/repodata/733703470dd10accd9234492acb0c74de09f91772c2767107f6b0fa567cbc26a-updateinfo.xml.bz2
http://mirror.cs.pitt.edu/epel/7/x86_64/repodata/repomd.xml
http://mirror.es.its.nyu.edu/epel/7/x86_64/repodata/060e3733e53cb4498109a79991d586acf17b7f8e3ea056b2778363f774ad367b-primary.sqlite.bz2
http://mirror.es.its.nyu.edu/epel/7/x86_64/repodata/733703470dd10accd9234492acb0c74de09f91772c2767107f6b0fa567cbc26a-updateinfo.xml.bz2
http://mirror.es.its.nyu.edu/epel/7/x86_64/repodata/a73afa039fca718643fde7cf3bae07fc4f8f295e61ee9ea00ec2bb01c06d9fb0-comps-Everything.x86_64.xml.gz
http://mirror.es.its.nyu.edu/epel/7/x86_64/repodata/repomd.xml
http://mirrorlist.centos.org/?
http://mirrors.advancedhosters.com/centos/7.7.1908/extras/x86_64/repodata/e87982a7d437db5420d7a3821de1bd4a7b145d57a64a3a464cdeed198c4c1ad5-primary.sqlite.bz2
http://mirrors.advancedhosters.com/centos/7.7.1908/extras/x86_64/repodata/repomd.xml
http://mirrors.advancedhosters.com/centos/7.7.1908/os/x86_64/repodata/04efe80d41ea3d94d36294f7107709d1c8f70db11e152d6ef562da344748581a-primary.sqlite.bz2
http://mirrors.advancedhosters.com/centos/7.7.1908/os/x86_64/repodata/4af1fba0c1d6175b7e3c862b4bddfef93fffb84c37f7d5f18cfbff08abc47f8a-c7-x86_64-comps.xml.gz
http://mirrors.advancedhosters.com/centos/7.7.1908/os/x86_64/repodata/repomd.xml
http://mirrors.advancedhosters.com/centos/7.7.1908/updates/x86_64/repodata/9bdf7f17529d8072d5326d82e4e131a18d122462fb5104bed71eb5f7a0dd3ef6-primary.sqlite.bz2
http://mirrors.advancedhosters.com/centos/7.7.1908/updates/x86_64/repodata/repomd.xml
iad.mirror.rackspace.com:443
mirror.umd.edu:443
mirrors.fedoraproject.org:443
pypi.org:443
yum.repos.intel.com:443
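
To repeat this categorization for another region or OS flavor, a crude rule-based Python sketch like the following can give a first pass. The rules are assumptions derived only from the hosts in this one us-east-1 CentOS 7 log, not an official ParallelCluster inventory: anything under `.amazonaws.com` is AWS, `github.com` is GitHub, and hosts containing "mirror" or ending in `pypi.org`, `cloudfront.net` or `repos.intel.com` are treated as package repos. The `cloudfront.net` rule in particular is very coarse, since CloudFront fronts far more than package mirrors.

```python
from collections import defaultdict
from typing import Dict, Iterable, List
from urllib.parse import urlsplit

# Assumed rules, derived from a single us-east-1 CentOS 7 deployment log.
REPO_SUFFIXES = ("pypi.org", "cloudfront.net", "repos.intel.com")


def host_of(dest: str) -> str:
    """Extract the hostname from either "host:port" or a full http(s) URL."""
    if "://" in dest:
        return urlsplit(dest).hostname or ""
    return dest.rsplit(":", 1)[0].lower()


def categorize(dest: str) -> str:
    host = host_of(dest)
    if host.endswith(".amazonaws.com"):
        return "aws"      # AWS API endpoints and S3
    if host == "github.com":
        return "github"
    if "mirror" in host or host.endswith(REPO_SUFFIXES):
        return "repo"     # OS, EPEL, PyPI and vendor package sources
    return "other"        # anything unexpected: review by hand


def group_by_category(dests: Iterable[str]) -> Dict[str, List[str]]:
    groups: Dict[str, List[str]] = defaultdict(list)
    for dest in dests:
        groups[categorize(dest)].append(dest)
    return dict(groups)
```

Anything that lands in `"other"` is exactly the kind of surprise (like the GitHub dependency) worth raising with the firewall team.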

What My Firewall Team Actually Needs

Thankfully I don’t have to worry about OS, EPEL or Python mirrors on the internet that may use a constantly changing set of mirror hosts or CDN locations. All of our OS, Update, Patch and Software Package repos are hosted on internal private mirrors.

So in my case I can strip all destinations related to CentOS, EPEL and Python PyPI mirrors because our HPC grids are customized so the sources of those assets always point to internal private mirrors.

So the final set of info that my Firewall team actually needs to see is this curated list, which basically boils down to “APIs + S3 downloads + GitHub downloads”:

cloudformation.us-east-1.amazonaws.com:443
autoscaling.us-east-1.amazonaws.com:443
dynamodb.us-east-1.amazonaws.com:443
ec2.us-east-1.amazonaws.com:443
queue.amazonaws.com:443
s3.amazonaws.com/ec2metadata/ec2-metadata:80
us-east-1-aws-parallelcluster.s3.amazonaws.com:443
github.com:443

The above list will be applied to a dynamic firewall policy triggered by special Tags assigned to the EC2 hosts so we don’t have to worry about source IP firewall rules or subnet-wide VPC firewall rules.
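
Many firewall policies are written as host and port rather than URL paths, so here is a small Python sketch that collapses proxy destinations into `host:port` form and drops anything served by internal mirrors. `firewall_allowlist` and the `skip_substrings` patterns are hypothetical. Note that collapsing `http://s3.amazonaws.com/ec2metadata/ec2-metadata` to `s3.amazonaws.com:80` allows the whole S3 host on port 80, so keep the path-level rule if your firewall can enforce it.

```python
from typing import Iterable, List
from urllib.parse import urlsplit


def to_host_port(dest: str) -> str:
    """Normalize a Squid URL field to "host:port"."""
    if "://" in dest:
        parts = urlsplit(dest)
        # Fall back to the scheme's default port when the URL does not name one.
        port = parts.port or (443 if parts.scheme == "https" else 80)
        return f"{parts.hostname}:{port}"
    return dest.lower()  # CONNECT targets are already "host:port"


def firewall_allowlist(dests: Iterable[str], skip_substrings: Iterable[str]) -> List[str]:
    """Unique host:port entries, minus hosts matching any skip pattern."""
    skip = tuple(skip_substrings)
    keep = set()
    for dest in dests:
        host_port = to_host_port(dest)
        host = host_port.rsplit(":", 1)[0]
        if any(pattern in host for pattern in skip):
            continue  # served by an internal mirror, no firewall rule needed
        keep.add(host_port)
    return sorted(keep)
```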

Hope this may prove helpful to someone else dealing with a similar problem! Obviously my API destinations are region-specific so you’ll need to adjust accordingly if you are operating outside of US-EAST-1.
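
Since most of the list is templated on the region name, a Python sketch like this can generate a starting point for another region. Treat its output as a guess to confirm with your own proxy run: `parallelcluster_allowlist` is hypothetical, the `<region>-aws-parallelcluster` bucket name is inferred from the single us-east-1 bucket seen in the logs, and `queue.amazonaws.com` plus the `s3.amazonaws.com` metadata-script download are only added for us-east-1 because that is the only region observed here. Pass the equivalents for other regions via `extra` once verified.

```python
from typing import Iterable, List

# Regional API endpoints seen in the us-east-1 proxy logs.
REGIONAL_API_SERVICES = ("cloudformation", "autoscaling", "dynamodb", "ec2")

# Observed only in us-east-1; regional equivalents must be confirmed separately.
US_EAST_1_ONLY = ("queue.amazonaws.com:443", "s3.amazonaws.com:80")


def parallelcluster_allowlist(region: str, extra: Iterable[str] = ()) -> List[str]:
    hosts = [f"{svc}.{region}.amazonaws.com:443" for svc in REGIONAL_API_SERVICES]
    # Assumption: the asset bucket name follows the "<region>-aws-parallelcluster" pattern.
    hosts.append(f"{region}-aws-parallelcluster.s3.amazonaws.com:443")
    hosts.append("github.com:443")
    if region == "us-east-1":
        hosts.extend(US_EAST_1_ONLY)
    hosts.extend(extra)  # caller-supplied, verified endpoints for other regions
    return hosts
```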